Grad-CAM

See what your AI sees: applying Grad-CAM

When developing an artificial intelligence model to classify images, the biggest challenge is often interpreting the results. Validation data is one tool, but most of the time the trained network remains a black box, and we don't know how exactly the result was obtained. Grad-CAM offers a way to see what your AI sees.

Debugging TensorFlow with Grad-CAM Heatmaps

Machine learning has greatly improved the quality of automated image classification and object detection.

You train a model on a set of data, then use it to make predictions on new data. This sounds simple. In reality, it takes a lot of experimenting with parameters, and very often a lot of data is required to create an accurate model.

The biggest problem we encountered while training our networks was: how do we interpret the results?

Training performance tells us how accurately a model predicts data, and we also use validation data to measure its performance. But mostly, the trained network remains a black box, and we don't know how exactly the result was achieved.

Grad-CAM

In their paper "Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization", Selvaraju et al. proposed the Grad-CAM algorithm, which creates "a coarse localization map highlighting important regions in the image for predicting the concept."

This means we can see "what the network is looking at" when it makes a prediction. For a tutorial in Python, see the Keras Grad-CAM tutorial.
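At its core, Grad-CAM weights each feature map A^k of the last convolutional layer by the spatially averaged gradient of the class score y^c with respect to that map, alpha_k = mean over i, j of dy^c/dA^k_ij, then sums the weighted maps and applies a ReLU: L = ReLU(sum_k alpha_k A^k). The minimal sketch below covers only that combination step. It assumes the activations and gradients have already been extracted as (H, W, K) NumPy arrays; in TensorFlow they would typically come from automatic differentiation with tf.GradientTape, as in the Keras tutorial. The function name grad_cam_from_gradients is hypothetical, and the final scaling to [0, 1] is a display convenience rather than part of the definition.

```python
import numpy as np

def grad_cam_from_gradients(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Hypothetical helper: turn last-conv-layer activations and class-score
    gradients into a Grad-CAM heatmap.

    activations: shape (H, W, K), the feature maps A^k of the last conv layer.
    gradients:   shape (H, W, K), d y^c / d A^k for the target class c.
    Returns an (H, W) heatmap scaled to [0, 1].
    """
    if activations.shape != gradients.shape or activations.ndim != 3:
        raise ValueError("activations and gradients must both have shape (H, W, K)")
    # Importance weight per channel: average the gradients over the spatial axes.
    weights = gradients.mean(axis=(0, 1))                      # shape (K,)
    # Weighted sum of the feature maps over the channel axis.
    cam = np.tensordot(activations, weights, axes=([2], [0]))  # shape (H, W)
    # ReLU: keep only regions that increase the class score.
    cam = np.maximum(cam, 0.0)
    # Scale to [0, 1] for display; an all-zero map is returned unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy example: channel 0 fires top-left and raises the score,
# channel 1 fires bottom-right and lowers it.
A = np.zeros((2, 2, 2))
A[0, 0, 0] = 1.0
A[1, 1, 1] = 1.0
G = np.zeros((2, 2, 2))
G[..., 0] = 1.0
G[..., 1] = -1.0
heatmap = grad_cam_from_gradients(A, G)  # only the top-left cell is non-zero
```

The ReLU is what makes the map show only evidence *for* the class: in the toy example the bottom-right region actively lowers the score, so it ends up dark rather than hot.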

Applying Grad-CAM to ID classification

At our startup Fidentity, which builds a browser-based identification system, we use machine learning for ID classification. By analyzing Grad-CAM images, we were able to improve the quality of our neural network.

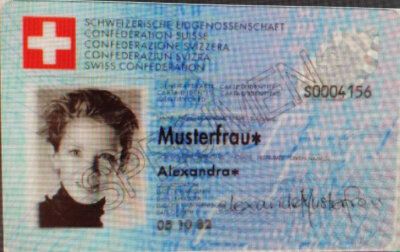

In this example, we wanted to train a model that detects the artifacts that occur when someone records an ID from a computer screen (as opposed to recording the physical ID directly with their mobile phone):

We started out by training a very shallow network, then applied Grad-CAM to it. Note that in this case the resulting prediction ("is it a screen recording or not?") is irrelevant: we want to see which regions the model found important for predicting the result.

In the left image, the Swiss flag in the top-left corner seems to be very important to the model, since it shows up hot yellow. In the right image, the hot zones lie around the corners, with no activation in the center region.
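Heatmaps like these are produced by rendering the coarse Grad-CAM map with a color ramp and blending it over the input image. Because the map has the spatial resolution of the last convolutional layer, it first has to be upsampled to the image size. The sketch below assumes an RGB image with values in [0, 1] and a heatmap already scaled to [0, 1]. The function names, the nearest-neighbour upsampling (smoother interpolation such as bilinear is common; nearest-neighbour keeps this NumPy-only) and the black-red-yellow ramp are all choices made for this illustration, not the colormap used for the figures in this article.

```python
import numpy as np

def upsample_nearest(cam: np.ndarray, height: int, width: int) -> np.ndarray:
    """Hypothetical helper: nearest-neighbour upsampling of an (h, w) map to (height, width)."""
    h, w = cam.shape
    rows = (np.arange(height) * h) // height  # source row for each target row
    cols = (np.arange(width) * w) // width    # source column for each target column
    return cam[rows[:, None], cols[None, :]]


def overlay(image: np.ndarray, cam: np.ndarray, alpha: float = 0.4) -> np.ndarray:
    """Hypothetical helper: blend a [0, 1] heatmap onto an (H, W, 3) RGB image in [0, 1].

    Color ramp (chosen for this sketch): 0 -> black, 0.5 -> red, 1 -> yellow.
    """
    heat = upsample_nearest(cam, image.shape[0], image.shape[1])
    # Red rises over the first half of the heat range, green over the second half.
    red = np.clip(2.0 * heat, 0.0, 1.0)
    green = np.clip(2.0 * heat - 1.0, 0.0, 1.0)
    blue = np.zeros_like(heat)
    colored = np.stack([red, green, blue], axis=-1)
    # Alpha-blend the colored heatmap over the original image.
    return (1.0 - alpha) * image + alpha * colored
```

With this ramp, the regions the model relies on most turn yellow, which is the kind of signal that made the Swiss flag stand out in the first iteration.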

Neither result is what we want, so we added more training data and improved the network architecture. After a later iteration, the heatmap looked like this:

Here we can see that there is no activity around the face (dark) and that, in general, everything else seems to influence the prediction (green-yellow), which is better.

Even if this model turned out not to work, we now at least know that we are not training a Swiss flag or face detector and that we are on the right path.
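The flag and face observations above were made by eye. To track them across training iterations, one option is to measure how much of the heatmap's mass falls inside a region of interest, such as a bounding box around the portrait photo or the flag. This is a hypothetical helper sketched for illustration, not part of the workflow described here; it assumes a non-negative Grad-CAM map at image resolution, and the function names and box coordinates are made up.

```python
import numpy as np

def region_share(cam: np.ndarray, top: int, left: int, bottom: int, right: int) -> float:
    """Hypothetical helper: fraction of the total heatmap mass inside the box
    cam[top:bottom, left:right]. Returns 0.0 for an all-zero map."""
    total = float(cam.sum())
    if total == 0.0:
        return 0.0
    return float(cam[top:bottom, left:right].sum()) / total


def region_focus(cam: np.ndarray, top: int, left: int, bottom: int, right: int) -> float:
    """Hypothetical helper: heat share divided by area share. Above 1 the box is
    hotter than the image average, below 1 it is cooler. Returns 0.0 for an empty box."""
    box = cam[top:bottom, left:right]
    if box.size == 0:
        return 0.0
    area_share = box.size / cam.size
    return region_share(cam, top, left, bottom, right) / area_share
```

Normalizing by area matters because a large box collects more heat simply by covering more pixels. A focus value well below 1 for the face box would roughly correspond to the "dark face" in the later heatmap, while a high value for the flag box would point to the earlier failure mode.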

Conclusion

Applying the Grad-CAM algorithm to your TensorFlow models is not only very easy, it can also save you a lot of time when designing your neural network architecture.

Author

Edi Spring

Managing Director, Software Architect and Developer

Passionate trail runner and beer brewer